I moved into a small city where I don't know anyone, and now I want to buy a place. Problem is: there are no listings anywhere, almost no real estate agents, and no updated information on the web.

In a city where you have to call to order deliveries, and Google Maps won't give you their number, how can I find a place to live? Moving to a high-quality neighborhood here would cost way less than a normal one in a big city, so searching for it would be worth it, especially for a first-time buy.

Driving around, I see a lot of properties advertised as "for sale" on a sign with a phone number and no real estate agency attached. I could find one just by driving around. But the city is big, with too many streets to cover and too many unmapped dead ends. It has expanded organically, and the high- and low-infrastructure areas are just one street apart.

With Street View, you can see the “good parts” of the town. But that is still too much work and honestly, I can barely remember where I "drove" later.

If there were a way to "cull" neighborhoods, I would know where the promising areas are without driving into dangerous places. Afterward, I could narrow my search to only those areas.

The plan(TM):

Get all streets in the city area, with points evenly spaced along each road.

Get all the Street View pictures

Make GPT4 the judge of the neighborhoods.

Plot on a map!

I did not bother with Google Maps at first because it had too many "false" streets and would probably cost something. It would also take years of my time to download the 30 MB JS bundle of the Google Cloud Console and navigate its menus.

So I asked ChatGPT to generate code for me using OpenStreetMap. Not gonna lie, I didn't even want to bother reading the code it made: "I just want the .exe," I said. But ChatGPT got it horribly wrong and wouldn't fix it. So, like a caveman, I had to read it and fix it myself.

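```typescript
import * as turf from '@turf/turf';
import type { Feature, Polygon, GeoJsonProperties } from 'geojson';

// Types inferred from how the code below uses them
type Point = [number, number, number]; // [lat, lng, heading in degrees]
interface OverpassElement {
  geometry: { lat: number; lon: number }[];
}
interface OverpassResponse {
  elements: OverpassElement[];
}

// calculateAngleAtPoint and filterCloseGPSPoints are helpers defined elsewhere (not shown here)

// Fetch every way tagged as a highway inside the bounding box, with its geometry
async function getStreetNetwork(north: number, south: number, east: number, west: number): Promise<OverpassElement[]> {
  const url = `https://overpass-api.de/api/interpreter?data=[out:json];way(${south},${west},${north},${east})[highway];out geom;`;
  const response = await fetch(url);
  if (!response.ok) {
    throw new Error(`Overpass request failed: ${response.status}`);
  }
  const data: OverpassResponse = await response.json();
  return data.elements;
}

// Sample one point every `interval` meters along a street, keeping only points inside the polygon
function getPoints(street: OverpassElement, interval: number, boundingPolygon: Feature<Polygon, GeoJsonProperties>): Point[] {
  const points: Point[] = [];
  // turf.lineString needs at least two positions
  if (!street.geometry || street.geometry.length < 2) {
    return points;
  }
  const line = turf.lineString(street.geometry.map(coord => [coord.lon, coord.lat]));
  const length = turf.length(line, { units: 'meters' });
  // Start at i = 1 so the beginning of the street (usually an intersection) is skipped
  for (let i = 1; i <= length / interval; i++) {
    const distance = i * interval;
    const curPoint = turf.along(line, distance, { units: 'meters' });
    const angle = calculateAngleAtPoint(line, distance);
    if (turf.booleanPointInPolygon(curPoint, boundingPolygon)) {
      // GeoJSON stores [lng, lat]; the point cloud uses [lat, lng, angle]
      points.push([curPoint.geometry.coordinates[1], curPoint.geometry.coordinates[0], angle]);
    }
  }
  return points;
}

export default async function getStreetPoints(north: number, south: number, east: number, west: number): Promise<Point[]> {
  const points: Point[] = [];
  const interval = 70; // meters

  // Define the coordinates of the bounding box
  const coordinates = [
    [
      [west, north], // Top-left corner
      [east, north], // Top-right corner
      [east, south], // Bottom-right corner
      [west, south], // Bottom-left corner
      [west, north], // Closing the polygon by returning to the starting point
    ],
  ];

  // Create the polygon using Turf.js
  const boundingPolygon = turf.polygon(coordinates);

  const streets = await getStreetNetwork(north, south, east, west);
  for (const street of streets) {
    points.push(...getPoints(street, interval, boundingPolygon));
  }

  // Drop points closer than 40 m to one already kept
  const filteredPoints = filterCloseGPSPoints(points, 40);
  for (const point of filteredPoints) {
    console.log(`[${point[0]}, ${point[1]}, ${point[2]}],`);
  }
  return filteredPoints;
}
```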

For the roads, I marked a point every 70 m (in imperial units, that is 0.76 football fields). I had a “bug” where every intersection got marked. But that was because an intersection is the "beginning of the road". Duh!
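Each sampled point also carries a heading, which comes from a `calculateAngleAtPoint` helper that is not shown in this post. Here is a minimal sketch of how such a helper could work without any library, assuming the street is a plain list of `{ lat, lon }` vertices. The names `headingAtDistance`, `bearingDegrees` and `haversineMeters` are made up for illustration and are not the original code.

```typescript
// Hypothetical stand-in for the calculateAngleAtPoint helper (the real one is not shown in the post).
type LatLng = { lat: number; lon: number };

const EARTH_RADIUS_M = 6371000;
const toRad = (deg: number): number => (deg * Math.PI) / 180;
const toDeg = (rad: number): number => (rad * 180) / Math.PI;

// Great-circle distance in meters (haversine formula).
function haversineMeters(a: LatLng, b: LatLng): number {
  const dLat = toRad(b.lat - a.lat);
  const dLon = toRad(b.lon - a.lon);
  const h =
    Math.sin(dLat / 2) ** 2 +
    Math.cos(toRad(a.lat)) * Math.cos(toRad(b.lat)) * Math.sin(dLon / 2) ** 2;
  return 2 * EARTH_RADIUS_M * Math.asin(Math.sqrt(h));
}

// Initial compass bearing from a to b, normalized to [0, 360).
function bearingDegrees(a: LatLng, b: LatLng): number {
  const dLon = toRad(b.lon - a.lon);
  const y = Math.sin(dLon) * Math.cos(toRad(b.lat));
  const x =
    Math.cos(toRad(a.lat)) * Math.sin(toRad(b.lat)) -
    Math.sin(toRad(a.lat)) * Math.cos(toRad(b.lat)) * Math.cos(dLon);
  return (toDeg(Math.atan2(y, x)) + 360) % 360;
}

// Heading of the street segment that contains the spot `distance` meters from the start.
function headingAtDistance(line: LatLng[], distance: number): number {
  if (line.length < 2) {
    throw new Error("A street needs at least two vertices");
  }
  let walked = 0;
  for (let i = 0; i < line.length - 1; i++) {
    const segment = haversineMeters(line[i], line[i + 1]);
    if (walked + segment >= distance) {
      return bearingDegrees(line[i], line[i + 1]);
    }
    walked += segment;
  }
  // Past the end of the street: fall back to the direction of the last segment.
  return bearingDegrees(line[line.length - 2], line[line.length - 1]);
}
```

Taking the direction of the containing segment is the simplest choice. On a sharp bend the camera will face along one of the two legs, which is fine here because every point is also photographed at heading + 180.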

2. Getting the Street View photos

Using Google's APIs is super duper expensive now. And Playwright is easier than reading Google's documentation, so I am cashing in the favor I earned by contributing to Google Maps for long enough.

One huge tip is to make the image dimensions a multiple of 512 for OpenAI: a 512x512 image needs 4x fewer tiles than a 513x513 one. The documentation says a high-detail image is split into 512x512 tiles, billed at 170 tokens per tile plus a flat 85 tokens per image. We do 1024x512, so it is wide and only 2 tiles!
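To sanity check the tile math, here is a small estimator. It is only a sketch of the high-detail rules as I understand them from OpenAI's vision documentation for gpt-4o: fit the image inside 2048x2048, shrink it so the shortest side is at most 768, then bill 170 tokens per 512x512 tile plus 85 base tokens. It assumes small images are not upscaled, and `estimateImageTokens` is a made-up name, not part of any SDK.

```typescript
// Rough high-detail image cost estimate. The constants are my reading of the docs
// for gpt-4o and may differ for other models.
const TILE_SIZE = 512;
const TOKENS_PER_TILE = 170;
const BASE_TOKENS = 85;

function estimateImageTokens(width: number, height: number): { tiles: number; tokens: number } {
  let w = width;
  let h = height;

  // Step 1: scale down to fit inside a 2048 x 2048 square.
  const longest = Math.max(w, h);
  if (longest > 2048) {
    const scale = 2048 / longest;
    w = Math.round(w * scale);
    h = Math.round(h * scale);
  }

  // Step 2: scale down so the shortest side is at most 768px.
  // Assumption: images that are already smaller are left untouched.
  const shortest = Math.min(w, h);
  if (shortest > 768) {
    const scale = 768 / shortest;
    w = Math.round(w * scale);
    h = Math.round(h * scale);
  }

  // Step 3: count the 512px tiles needed to cover the image.
  const tiles = Math.ceil(w / TILE_SIZE) * Math.ceil(h / TILE_SIZE);
  return { tiles, tokens: tiles * TOKENS_PER_TILE + BASE_TOKENS };
}

console.log(estimateImageTokens(512, 512));  // 1 tile
console.log(estimateImageTokens(513, 513));  // 4 tiles
console.log(estimateImageTokens(1024, 512)); // 2 tiles
```

Under these assumptions, the one extra pixel in 513x513 quadruples the tile count, while the 1024x512 viewport stays at two tiles per screenshot.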

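```typescript
import { chromium } from 'playwright';

// Generate 2 angles to capture: along the street and the opposite direction
const points = pointCloud.flatMap(([lat, lng, deg]) => {
  // Normalize into [0, 360) so a negative bearing does not produce a negative heading
  const heading = ((deg % 360) + 360) % 360;
  return [
    [lat, lng, heading],
    [lat, lng, (heading + 180) % 360],
  ];
});

// Set up Playwright Chromium browser
const browser = await chromium.launch({ headless: false });

// So we spend only 2 tiles!
const context = await browser.newContext({ viewport: { width: 1024, height: 512 } });

// `limit` is a concurrency limiter (p-limit style) created elsewhere, so only a few pages load at once
const promises = points.map(([lat, lng, deg]) => limit(() => getPhoto(context, lat, lng, deg)));
await Promise.all(promises);

// All screenshots are on disk, so the browser can go
await browser.close();
```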

```typescript
import fs from 'node:fs';
import type { BrowserContext } from 'playwright';

// Screenshot code (signature inferred from the getPhoto(context, lat, lng, deg) call)
async function getPhoto(context: BrowserContext, lat: number, lng: number, deg: number): Promise<Buffer> {
  const filename = `screenshots/screenshot_${lat}=${lng}=${deg}`.replace(/\./g, '_') + '.png';

  // Reuse the screenshot from a previous run if it is already on disk
  if (fs.existsSync(filename)) {
    console.log(`Skipping ${filename}`);
    return fs.readFileSync(filename);
  }

  const page = await context.newPage();
  const url = `http://maps.google.com/maps?q=&layer=c&cbll=${lat},${lng}&cbp=11,${deg},0,0,0`;
  console.log(`Navigating to: ${url}`);
  await page.goto(url);

  // Wait for the page to load (wasteful, I know)
  await page.waitForTimeout(5000);

  // Run the script to delete children except for 'id-scene'
  await page.evaluate(() => {
    const container = document.querySelector('.id-content-container');
    if (container) {
      container.querySelectorAll(':scope > :not(.id-scene)').forEach(child => child.remove());
    }
  });

  // Take the screenshot, then close the page so tabs do not pile up
  const screenshot = await page.screenshot({ path: filename, fullPage: true, type: 'png' });
  await page.close();
  return screenshot;
}
```

After the images were downloaded, I spent hours writing prompts and got mad that the first short draft I made was the best one. GPT4 gets biased if you give it too many details. For example, if Brazil is mentioned, it starts to sugarcoat how bad the street is:

"This precarious street without sidewalk […] is typical of a Brazilian suburb - I give 4 / 5" - GPT with more context. Was that a roast? Was the AI being ironic?

If you list which factors to consider in the prompt, it will hyperfocus on finding a positive aspect in the input. A street with an abandoned lot was given credit for its “greenery”. ChatGPT probably has instructions to be positive about whatever you give it in the prompt, which is not ideal in this case.

A good surprise is that the new structured output is awesome: you don’t need to YELL at the AI to not over-explain things, or add safeguards around its answers, and it saved me a lot of time I would otherwise have spent calibrating the prompt.

```typescript
import path from 'node:path';
import type { ChatCompletionContentPartImage } from 'openai/resources/chat/completions';

// Excerpt from the rating function: openai, photosPath, schema, lat, lng,
// SCREENSHOT_DIR and convertImageToBase64 come from the surrounding code.
const images: ChatCompletionContentPartImage[] = photosPath.map(file => {
  // Accept either a file name on disk or an in-memory Buffer
  const image = typeof file === "string" ?
    convertImageToBase64(path.join(SCREENSHOT_DIR, file)) :
    file.toString("base64");
  return {
    type: "image_url",
    image_url: {
      // The screenshots are PNGs, so the data URL must say so
      url: `data:image/png;base64,${image}`,
    }
  };
});

const completion = await openai.beta.chat.completions.parse({
  model: "gpt-4o-2024-08-06",
  messages: [
    {
      role: "system",
      content: `
Your task involves receiving street view images and classifying the neighborhood infrastructure on a scale from 0 to 5.
You will evaluate the neighborhood based on the average quality of its housing and pricing and translate that to a level.
A low level (0) might represent a street with dirt roads and unfinished houses with exposed bricks.
A high level (5) would indicate a well-developed area with asphalt roads and high-quality houses.
`
    },
    {
      role: "user", content: images,
    },
  ],
  response_format: {
    type: "json_schema",
    json_schema: schema
  },
});

if (completion.choices[0].message.refusal) {
  throw new Error(`API refused to rate pointPhotos: ${lat}, ${lng}`);
}

return completion.choices[0].message.parsed as any as Response;
```
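The `schema` object passed as `json_schema` is not shown in this post. As a rough idea of what it could look like, here is a sketch that follows the `name` / `strict` / `schema` layout the structured output feature expects. The `rating` and `reason` fields and the `isNeighborhoodRating` guard are assumptions for illustration, not the original schema.

```typescript
// Hypothetical schema for the `json_schema` field; the real one is not shown in the post.
const ALLOWED_RATINGS = [0, 1, 2, 3, 4, 5];

const schema = {
  name: "neighborhood_rating",
  strict: true,
  schema: {
    type: "object",
    properties: {
      rating: {
        type: "integer",
        enum: ALLOWED_RATINGS,
        description: "Infrastructure level of the street, from 0 (worst) to 5 (best)",
      },
      reason: {
        type: "string",
        description: "One short sentence justifying the rating",
      },
    },
    // Strict mode wants every property listed as required and no extra keys.
    required: ["rating", "reason"],
    additionalProperties: false,
  },
};

interface NeighborhoodRating {
  rating: number;
  reason: string;
}

// Runtime check for a parsed response, instead of casting through `any`.
function isNeighborhoodRating(value: unknown): value is NeighborhoodRating {
  if (typeof value !== "object" || value === null) {
    return false;
  }
  const candidate = value as Record<string, unknown>;
  const rating = candidate.rating;
  const reason = candidate.reason;
  return (
    typeof rating === "number" &&
    ALLOWED_RATINGS.some(allowed => allowed === rating) &&
    typeof reason === "string"
  );
}
```

An `enum` of the six allowed integers pins the range inside the schema itself, so the prompt does not have to repeat it.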

3. Mo data mo problems

One last thing: there are some loose spots where a few good houses sit on an overall bad street. Those are not ideal candidates, so I used something similar to KNN to remove that "noise". Yes, I wrote O(n**2) code, since my time is worth more than the energy my Mac will use.

```typescript
interface RatedPoint {
  lat: number;
  lng: number;
  rating: number;
}

// calculateDistance(a, b) returns the distance between two points; it is defined elsewhere (not shown here)

// Function to find the k (4 by default) nearest neighbors of a given point
function findNearestNeighbors(point: RatedPoint, points: RatedPoint[], k: number = 4): (RatedPoint & { distance: number })[] {
  return points
    .map(p => ({
      ...p,
      distance: calculateDistance(point, p)
    }))
    .sort((a, b) => a.distance - b.distance)
    .slice(1, k + 1); // Ignoring the first one because it will be the point itself
}

// Function to modify points based on nearest neighbors
function adjustRatings(ratings: RatedPoint[]): RatedPoint[] {
  // Drop unrated points (-1) first so they cannot count as anyone's neighbor
  const rated = ratings.filter(point => point.rating !== -1);
  return rated.map(point => {
    const nearestNeighbors = findNearestNeighbors(point, rated);
    if (nearestNeighbors.length === 0) {
      return point;
    }
    const maxNeighborRating = Math.max(...nearestNeighbors.map(p => p.rating));
    // A point rated above every neighbor is "noise": pull it down to the best neighbor's rating
    if (point.rating > maxNeighborRating) {
      return { ...point, rating: maxNeighborRating };
    }
    return point;
  });
}
```

Overall, it was good enough. Looking at the ratings and the images in a vacuum, like the AI did, I would rate them the same. There were no disagreements between me and the AI that would invalidate the whole thing.

I also removed the beginning of the street in my code and removed nearby points to save some tokens.
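The "removed nearby points" part is the `filterCloseGPSPoints(points, 40)` call, whose body is not shown in this post. A minimal sketch of how such a filter could work, assuming a greedy pass over `[lat, lng, heading]` tuples and a haversine distance. The names `filterClosePoints` and `distanceMeters` are made up for illustration.

```typescript
// Hypothetical stand-in for filterCloseGPSPoints (the real one is not shown in the post).
type Point = [number, number, number]; // [lat, lng, heading in degrees]

const EARTH_RADIUS_METERS = 6371000;
const radians = (deg: number): number => (deg * Math.PI) / 180;

// Great-circle distance between two points in meters (haversine formula).
function distanceMeters(a: Point, b: Point): number {
  const dLat = radians(b[0] - a[0]);
  const dLng = radians(b[1] - a[1]);
  const h =
    Math.sin(dLat / 2) ** 2 +
    Math.cos(radians(a[0])) * Math.cos(radians(b[0])) * Math.sin(dLng / 2) ** 2;
  return 2 * EARTH_RADIUS_METERS * Math.asin(Math.sqrt(h));
}

// Greedy thinning: keep a point only if no already-kept point lies within minDistance meters.
function filterClosePoints(points: Point[], minDistance: number): Point[] {
  const kept: Point[] = [];
  for (const candidate of points) {
    if (kept.every(other => distanceMeters(other, candidate) >= minDistance)) {
      kept.push(candidate);
    }
  }
  return kept;
}

// Two points about 11 m apart collapse into one; the third, about 111 m away, survives.
const sample: Point[] = [
  [0, 0, 90],
  [0, 0.0001, 90],
  [0, 0.001, 90],
];
console.log(filterClosePoints(sample, 40).length);
```

This is O(n**2) as well, and the result depends on input order, since whichever point comes first wins. A distance helper like this one could also serve as the `calculateDistance` used by the neighbor search, after adapting it to `{ lat, lng }` objects.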

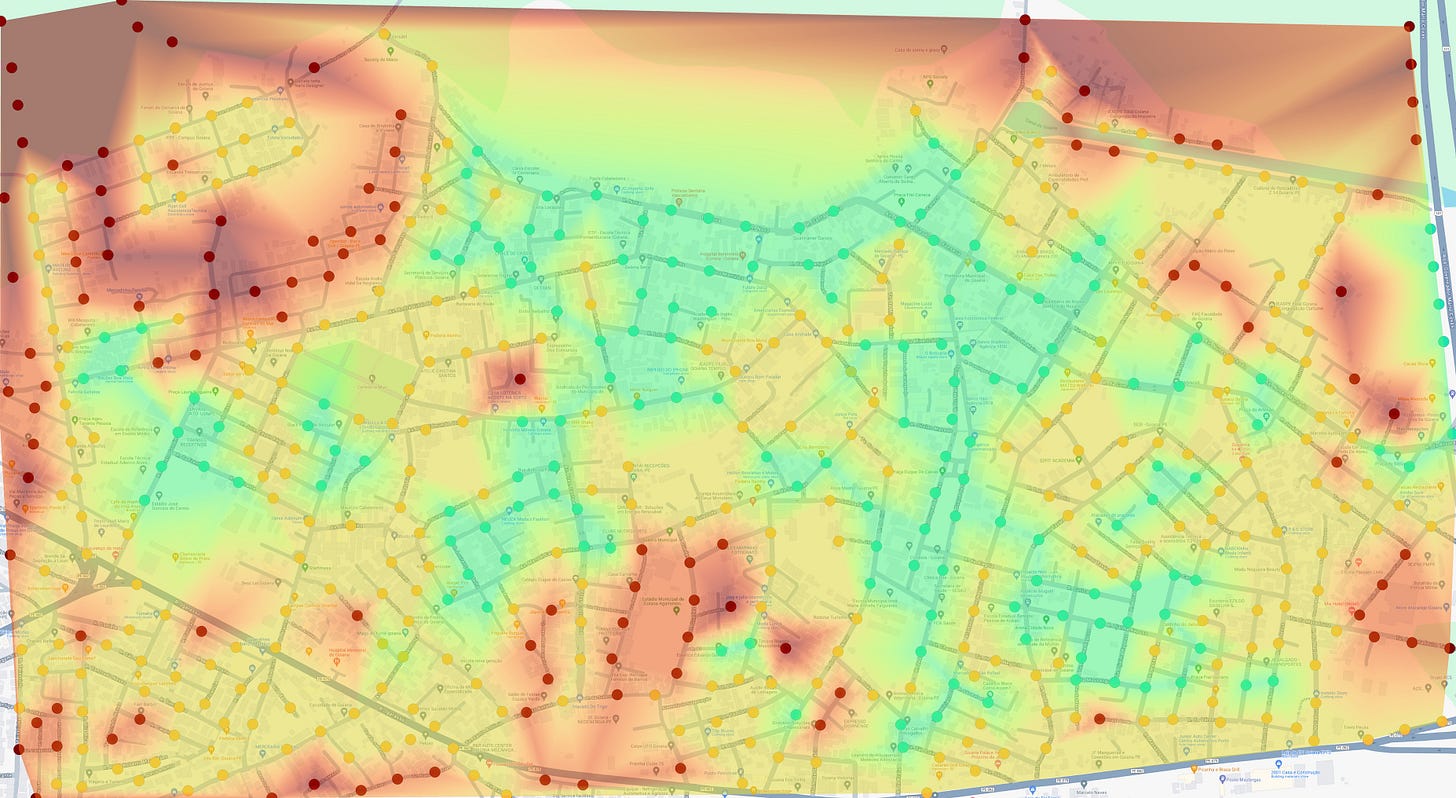

Here is the final thing, using QGIS and TIN interpolation among points:

Insights

The good news was that every place got at least a 1. Despite my telling it to rate from 0 to 5, no legit zeros were given, even on muddy roads with thick vegetation. But that was a good thing: it independently decided to reserve 0 for "not found" images, and I could easily filter those out.

The bad news was that no place got a 5, and the one that got a 4 was removed by the KNN, which is good for the quality of the rating but bad news for the city overall. I think a 4-5 would be posh American suburbia with an HOA, lots of grass lawns, and a wide asphalt road with no overhead wires, and we have that here.

A surprise was how consistently it judged according to its own parameters. Every point was prompted in isolation, yet the ratings stayed similar across nearby road spots. There were rarely sudden jumps from 1 to 3, for example.

Another surprise was that the AI loved parks. It could be the saddest playground ever, but if there was a park, the street got at least a 1-point bump. That makes sense, though: a park is a “permanent view” of some sort.

What's next

Now I need to visit those places and drive around to see what is for sale. Next steps might be getting a 360 camera, putting it on the roof of the car à la Street View, taking pictures, and looking for "for sale" signs. Stay tuned!