Why AI for coding is so polarizing

One camp sees a productivity revolution, the other sees a pile of garbage code. Both might be right.

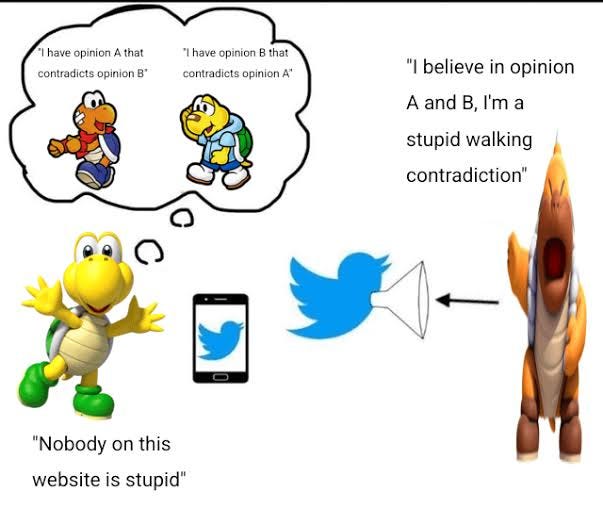

If you spend any time online, you've probably seen the wildly different opinions on using LLMs in coding. On one side are the Twitter bros bragging about how they built “a $1k revenue app in just 10 days using AI”.

On the other side are the engineers who refuse to use any LLM tool at all. You'll find them in every thread, insisting that AI sucks, produces garbage code, and only adds to technical debt.

Joking aside, some people use AI to do great things daily, while others have problems with it and have given up. The difference is context.

Why are there contradictions?

An LLM has no sapience. Everything the AI cooks up is a product of its training corpus, its fine-tuning, and the system and user prompts (with a bit of randomness for seasoning).

No matter how clever your prompt is, the training data is its foundation. This is why companies are so aggressively scraping the web. If you create a new language tomorrow called FunkyScript, the AI will be terrible at it, regardless of your prompt.

This explains the different experiences of AI detractors and champions. On the one hand, you have people new to coding working on greenfield projects with popular tools like Tailwind and React (which have a massive training corpus).

On the other hand, you have engineers working with more niche tools. A great example is CircleCI’s YAML configuration. CircleCI's documentation is difficult for an AI to ingest (because it sucks), so the AI starts hallucinating and spitting out code for GitHub Actions instead.

The context problem

Then there's the context window, the "short-term memory" of the AI. It's a known issue that the more context you stuff into a prompt, the "dumber" the model can get.

When you're working on a greenfield project, there are no existing files or dependencies, so you don't need to provide much context, which saves you from spending tokens on it.
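To get a feel for how quickly a codebase eats into that short-term memory, here is a minimal sketch that estimates what share of a context window a set of files would occupy. It assumes the common rule of thumb of roughly four characters per token, which is only an approximation since real tokenizers differ by model. The 200,000-token window in the usage note is a made-up figure for illustration, not a claim about any particular model.

```python
# Assumption: ~4 characters per token is a rough rule of thumb for English
# text and code. Real tokenizers vary by model, so treat this as an estimate.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Very rough token estimate based on character count."""
    return len(text) // CHARS_PER_TOKEN


def context_share(file_texts: list[str], window_tokens: int) -> float:
    """Fraction of a context window the given file contents would occupy."""
    total = sum(estimate_tokens(text) for text in file_texts)
    return total / window_tokens
```

With a hypothetical 200,000-token window, a single 2,000-character file is a rounding error, while twenty 40,000-character legacy files would, by this estimate, fill the window before you have typed a single instruction.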

But greenfield projects aren't the norm. The norm is a legacy codebase built by multiple people who changed many parts and then left the company. Some of it has parts that don't make sense even to a human, much less to an LLM.

All this extra context weighs down the LLM and eats into its token budget. Consider the same prompt: "Change all the colors to blue on my Auth page." In a new project, the AI can probably find and handle the relevant files.

But on a mature codebase, that auth page is tied to a color system, part of a larger design system. Now the AI is in trouble. Throw in some unit tests that will inevitably break, and the AI is completely lost.

"Hey AI, you broke this stuff" — You say, thinking you are not using AI enough

Then the AI sycophantically replies:

"You are absolutely right! Let me try another approach!"

Now you're the one in trouble. It's time to shut the AI down and salvage what you can from the wreckage.

Partial Solution - “Fine-tuning” instructions

This isn't a perfect fix, but there is a strategy to make the AI less destructive and, eventually, genuinely helpful. You'll have to decide if the upfront effort is worth it compared to coding manually. It won't be worth it for the FunkyScript codebase, but I have had success with it on niche stacks, like mobile E2E.

In complex codebases, an AI must relearn your project's unique patterns with every prompt. The solution is to give it that knowledge upfront, rather than making it rediscover everything at "runtime."

A good CLAUDE.md, for example, which an LLM can read before performing a task, helps the AI understand how your project differs from the defaults it picked up in training. Your CLAUDE.md is not the place to say “do it right, stop making it wrong,” as a lot of people do.

We can even use the AI itself to help. Here is an example prompt. You should provide more high-level context for a real project, especially if your README.md sucks.

You are a senior engineer onboarding another senior engineer to our codebase. Analyze the provided files at a high level. Study its structure and patterns, then write a document explaining how to work on it. Highlight the parts that differ from common industry patterns for this language and framework.

For example, do you use Bun instead of npm? Inline styles instead of CSS? These are crucial details the model needs to know; otherwise, it will default to the most common patterns in its training data.
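If you want to feed that prompt to a model together with real files, a small script can do the assembling. This is only a sketch: the function names, the `--- filename ---` separators, and the per-file character cap are my own choices for illustration, not part of any tool. It builds a string and nothing more, so how you send it to your assistant is up to you.

```python
from pathlib import Path

# The onboarding prompt from this article, kept as a single string.
ONBOARDING_PROMPT = (
    "You are a senior engineer onboarding another senior engineer to our "
    "codebase. Analyze the provided files at a high level. Study its "
    "structure and patterns, then write a document explaining how to work "
    "on it. Highlight the parts that differ from common industry patterns "
    "for this language and framework."
)


def read_files(root: Path, patterns: tuple[str, ...]) -> dict[str, str]:
    """Collect the text of files under root that match any glob pattern."""
    files: dict[str, str] = {}
    for pattern in patterns:
        for path in root.glob(pattern):
            if path.is_file():
                name = str(path.relative_to(root))
                files[name] = path.read_text(encoding="utf-8", errors="ignore")
    return files


def build_onboarding_prompt(files: dict[str, str], max_chars_per_file: int = 4000) -> str:
    """Join the instructions with labelled, truncated file contents."""
    sections = [ONBOARDING_PROMPT]
    for name, text in sorted(files.items()):
        # Hypothetical cap: truncate each file so the prompt itself
        # does not flood the context window.
        sections.append(f"--- {name} ---\n{text[:max_chars_per_file]}")
    return "\n\n".join(sections)
```

For instance, `build_onboarding_prompt(read_files(Path("."), ("README.md", "package.json", "src/**/*.ts")))` would give you one string to paste into your assistant. Those file patterns are placeholders, so swap in whatever best represents your project, then review the reply by hand before saving it as your CLAUDE.md.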

So, the next time someone gives an opinion on AI that differs from yours, maybe don't immediately jump to arguing. They aren't necessarily doomers who will be replaced, nor are they grifters selling snake oil. Consider that not every engineer works on your stack / codebase.

... or maybe they are all koopas: